By Alexander Serechenko, OneTick LLM Team Lead, and Peter Simpson, OneTick Product Owner

Developers and analysts working with financial firms face a common hurdle: navigating vast amounts of trade and market data scattered across internal systems. Traditional search methods, unfortunately, are often inadequate for this specialized domain, failing on everything from a simple typo (like misspelling "average") to complex, natural language questions.

To overcome these barriers and accelerate time-to-insight, OneTick has implemented specialized AI capabilities focused on Vector-Based Search and Retrieval-Augmented Generation (RAG).

The Limitations of Keyword Search

Traditional keyword-based search is brittle. For complex queries or long natural language sentences, it struggles because it matches each word of the query individually against page text, which often surfaces unrelated results and renders the search "quite useless."
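To make the failure mode concrete, here is a minimal sketch of a word-by-word keyword matcher. It is an illustration only, not the search engine that was replaced; the `keyword_search` function and the page snippets in `PAGES` are invented for this example.

```python
def keyword_search(query, pages):
    """Rank pages by how many query words appear verbatim in the page text."""
    words = query.lower().split()
    scores = {}
    for title, text in pages.items():
        tokens = set(text.lower().split())
        hits = sum(1 for word in words if word in tokens)
        if hits:
            scores[title] = hits
    # Highest hit count first; ties keep the original page order.
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical page snippets, invented for illustration only.
PAGES = {
    "Aggregations": "compute the average price over a time bucket",
    "Joins": "how to join trades with quotes by time",
    "Installation": "how to install the package with pip",
}

print(keyword_search("average", PAGES))  # exact spelling finds the page
print(keyword_search("avergae", PAGES))  # a single typo finds nothing
print(keyword_search("how do i get the avergae of my trades", PAGES))
```

In the last query, filler words such as "how" and "the" match unrelated pages, so the page the user actually wants ranks last.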

The Power of Semantic Similarity (Vector-Based Search)

The solution lies in leveraging semantic similarity search, which uses Large Language Models (LLMs) to understand the meaning and context of a query.

- Embedding Content: The content of each documentation page is converted into a vector representation (an embedding). Texts with related meaning (e.g., all pages discussing average aggregations) are placed close to each other in this vector space.

- Matching Queries: When a user asks a question, its semantic meaning is also converted into a vector. The search then returns the documentation pages that have the closest vector match to the user's query.

- Accuracy and Speed: This approach returns relevant documentation pages even for complex natural language questions such as "How do I calculate a period VWAP for my trades?", and the results include an AI-generated summary and a sample code snippet.
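The embed-then-match mechanics described above can be sketched in a few lines. This is a simplified illustration under a loud assumption: `toy_embed` is a stand-in that counts character trigrams, so it captures only spelling overlap, whereas a real embedding model also places paraphrases and related concepts close together. The function names and the page snippets are hypothetical.

```python
import math
from collections import Counter


def toy_embed(text):
    """Stand-in for an embedding model: a sparse vector of character-trigram counts."""
    t = text.lower()
    return Counter(t[i:i + 3] for i in range(len(t) - 2))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[gram] for gram, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def vector_search(query, index, top_k=3):
    """Embed the query and return the top_k closest pages as (title, score) pairs."""
    q = toy_embed(query)
    scored = [(title, cosine(q, vec)) for title, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical page snippets, invented for illustration only.
PAGES = {
    "Aggregations": "compute the average price over a time bucket",
    "Joins": "join trades with quotes by time",
    "Installation": "install the package with pip",
}

# Pages are embedded once, ahead of time; only the query is embedded per search.
INDEX = {title: toy_embed(text) for title, text in PAGES.items()}

for title, score in vector_search("avergae price", INDEX):
    print(f"{title}: {score:.3f}")
```

Because the query and the pages are compared as vectors rather than as exact words, the misspelled query still lands closest to the aggregation page. Swapping `toy_embed` for a call to an actual embedding model leaves the rest of the pipeline unchanged.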

The team chose the OpenAI GPT 4.1 large embedding model after detailed evaluations that assessed not only accuracy and precision but also performance metrics.

Validated Results and Enhanced Reliability

To ensure the reliability of this new approach, extensive experiments were conducted using real user queries, which were categorized into simple (e.g., searching for a function name) and complex (natural language questions) queries.

- For simple queries (searching for particular entity names), the vector search returned the expected documentation page in the first position 99% of the time. Even when the query was altered by introducing typos or omitting parts (like "otp."), the expected page still ranked first in ~80% of cases.

- For complex natural language queries collected from real users, the results were also strong: the expected page was in the first position ~80% of the time and appeared in the top ten 100% of the time.
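The two figures reported above, first-position rate and top-ten rate, can be computed with a small harness like the following. This is a sketch of the general technique, not the team's evaluation code; the `evaluate` function, the canned search results, and the page identifiers are all invented for illustration.

```python
def evaluate(search_fn, labeled_queries, k=10):
    """Return (first-position rate, top-k rate) over (query, expected_page) pairs."""
    if not labeled_queries:
        raise ValueError("labeled_queries must not be empty")
    top1 = 0
    topk = 0
    for query, expected in labeled_queries:
        results = search_fn(query)[:k]
        if results and results[0] == expected:
            top1 += 1
        if expected in results:
            topk += 1
    n = len(labeled_queries)
    return top1 / n, topk / n


# Hypothetical canned rankings standing in for a real search backend.
CANNED_RESULTS = {
    "average": ["page_average", "page_sum"],
    "avergae": ["page_sum", "page_average"],
    "period vwap for my trades": ["page_vwap", "page_average"],
    "join quotes": ["page_sum"],
}

# Each query is paired with the page a human judged to be the right answer.
LABELED = [
    ("average", "page_average"),
    ("avergae", "page_average"),
    ("period vwap for my trades", "page_vwap"),
    ("join quotes", "page_join"),
]

first_rate, top10_rate = evaluate(lambda q: CANNED_RESULTS.get(q, []), LABELED)
print(f"first position: {first_rate:.0%}, top ten: {top10_rate:.0%}")
```

Running the same harness separately on the simple, perturbed, and complex query sets yields one pair of rates per category.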

Retrieval-Augmented Generation (RAG) for Context-Aware Answers

The vector-based search is the foundation for the next level of natural language assistance: Retrieval-Augmented Generation (RAG). This mechanism ensures that the LLM generates answers grounded strictly in verified organizational knowledge.

The RAG process works as follows:

- The system receives a user query.

- It uses vector search to retrieve relevant documents (the "context").

- An LLM is instructed to use only these documents to produce a summary answer. The OpenAI GPT-4.1 model generates the main answer.

- To avoid "made up answers" or hallucinations, the system uses advanced prompting techniques and sets the temperature to its lowest value, zero.
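The steps above can be sketched as a small pipeline. This is a minimal illustration, not OneTick's implementation: the prompt wording, the `retrieve` and `generate` callables, and their signatures are assumptions, and the stubs stand in for the vector search and the LLM call so the example runs without network access.

```python
# Assumed instruction text; the actual prompts used in production are not published.
GROUNDING_RULES = (
    "Answer using ONLY the documents below. "
    "If they do not contain the answer, say that you do not know."
)


def build_prompt(question, docs):
    """Combine the grounding rules, the retrieved documents, and the question."""
    parts = [GROUNDING_RULES, ""]
    for i, (title, text) in enumerate(docs, start=1):
        parts.append(f"[Document {i}: {title}]")
        parts.append(text)
        parts.append("")
    parts.append(f"Question: {question}")
    return "\n".join(parts)


def rag_answer(question, retrieve, generate, top_k=3):
    """Retrieve context, then ask the model to answer from that context only."""
    docs = retrieve(question)[:top_k]
    if not docs:
        # Nothing to ground on, so do not call the model at all.
        return "No relevant documentation was found for this question."
    # Temperature zero makes the output as deterministic as the model allows.
    return generate(build_prompt(question, docs), temperature=0.0)


def stub_retrieve(question):
    """Hypothetical retriever; a real one would run the vector search."""
    return [("VWAP", "VWAP is sum(price * size) / sum(size) over the period.")]


def stub_generate(prompt, temperature):
    """Hypothetical model call; a real one would send the prompt to an LLM API."""
    return f"(stub reply, temperature={temperature}, prompt lines={len(prompt.splitlines())})"


print(rag_answer("How do I calculate a period VWAP for my trades?",
                 stub_retrieve, stub_generate))
```

Passing the retriever and the model in as callables keeps the grounding logic testable on its own, and returning early when nothing is retrieved avoids asking the model to answer without context.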

Real-World Applications and Future Development

This technology now powers the official public documentation search, where it has replaced the original keyword search functionality.

Furthermore, the technology is leveraged internally, where it helps engineers conduct complex searches, such as finding old Jira tickets simply by describing the ticket's content.

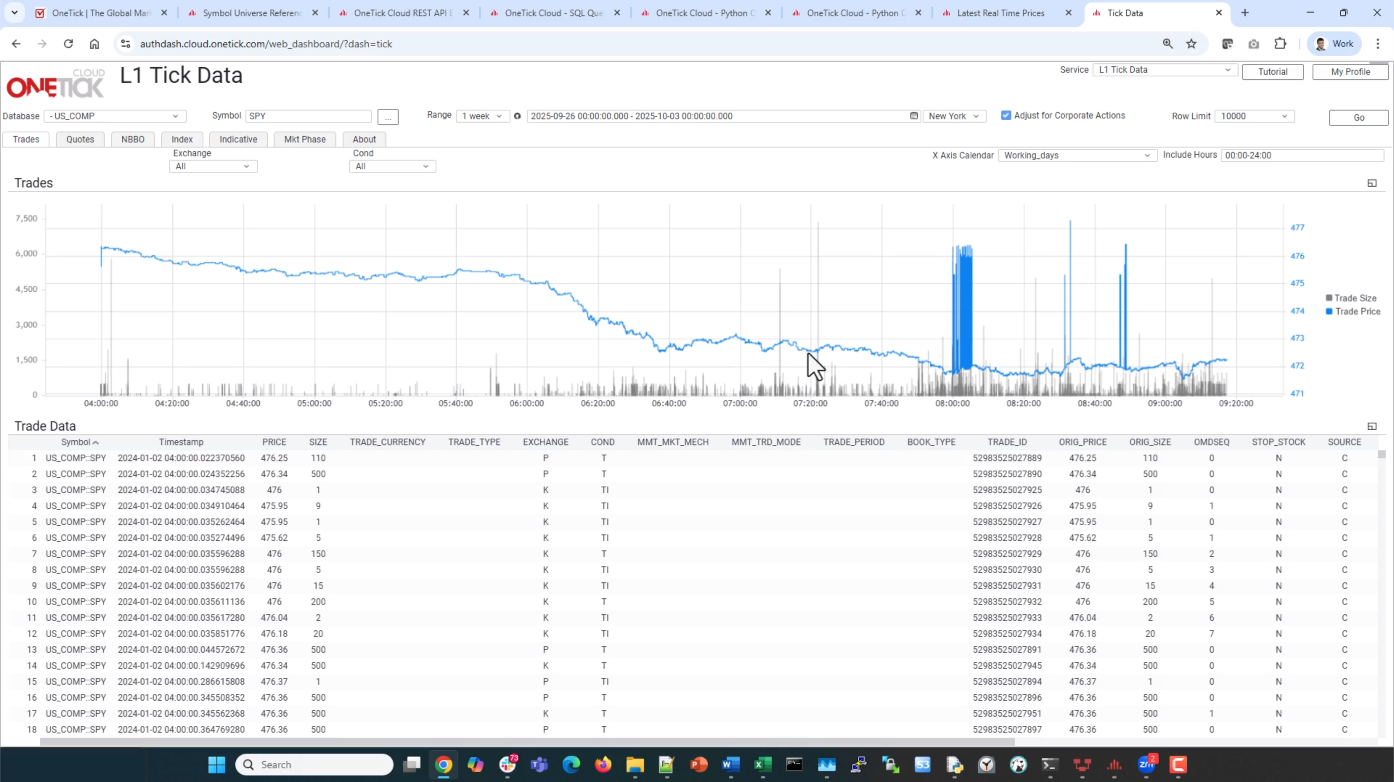

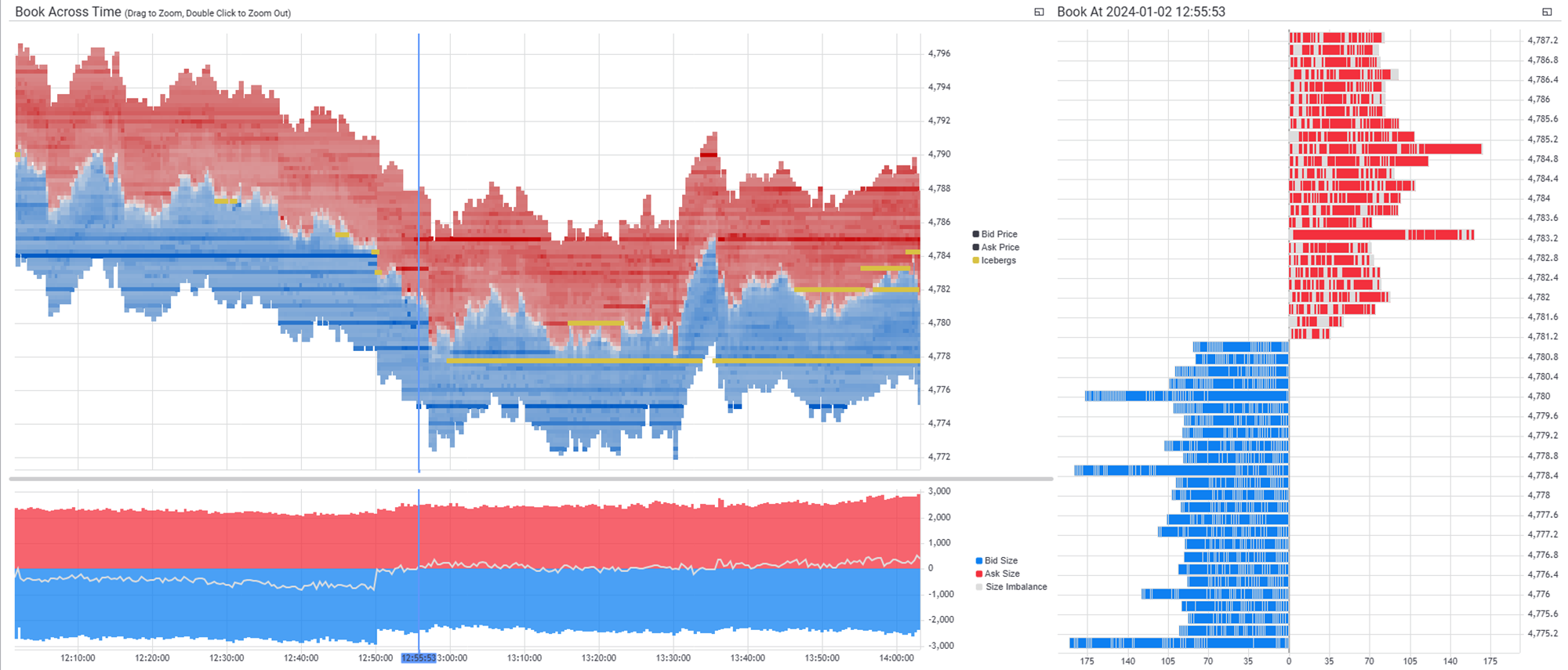

Looking ahead, the team is working on a "natural language query designer" (an internal ongoing project). The tool is intended to accept a natural language query, apply knowledge of financial analytics, and then write and execute code to query the OneTick database, thereby accelerating financial data analysis.

This implementation of specialized AI tools serves as an Engineering Knowledge Companion, acting as a critical bridge between stored organizational knowledge and natural conversation, helping engineers get immediate, accurate, and context-aware answers without relying on manually searching multiple platforms.

To learn more about OneTick, please visit onetick.com, email info@onetick.com, or request a private demo here.

Best wishes,

Alexander Serechenko, OneTick LLM Team Lead

Peter Simpson, OneTick Product Owner